
We are all familiar with the convenience of an editable text document that a computer can read easily and whose information can be put to a variety of uses. People have long wanted to use the text that appears all around them: in handwritten documents, receipts, images, signboards, hoardings, street signs, nameplates, vehicle number plates, video subtitles, photo captions, and many other forms. However, a computer cannot use this information directly, because it cannot recognize text from raw images alone. In most cases, we have no choice but to retype handwritten information, which is very time consuming. Researchers around the world have therefore been working to enable computers to recognize text directly from images, so that these sources of information can be put to use. A text recognition system overcomes these problems. These scenarios show the importance of a text recognition system, which has a wide range of applications in security, robotics, official documentation, content filtering, and more.

Figure: Machine learning text recognition

Due to digitalization, there is a huge demand for storing data in computers by converting documents into digital format. Recognizing text in sources such as text documents, images, and videos is difficult because of noise. A text recognition system is a technique in which a recognizer identifies characters, text, or symbols by transforming input images into a machine-readable format.

Text recognition has many benefits. For example, the historical papers kept in offices and other places can be converted to editable text and archived, instead of taking up space as hard copies. There are two main types of recognition: online and offline. Online recognition systems work with input from a tablet and digital pen, while offline recognition works with printed or handwritten documents.

Figure: Deep learning based text recognition

Machine learning text recognition

In machine learning text recognition, recognizing text in an image takes two steps. The first is to detect the text areas in the image and place a bounding box around each character. The second is to identify what each character is. An example of text recognition is given below (see Figure 1).

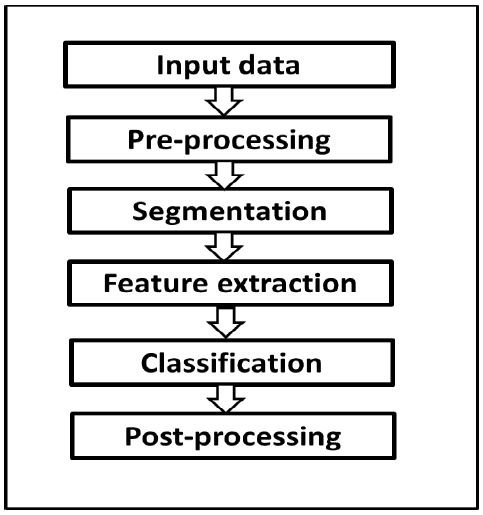

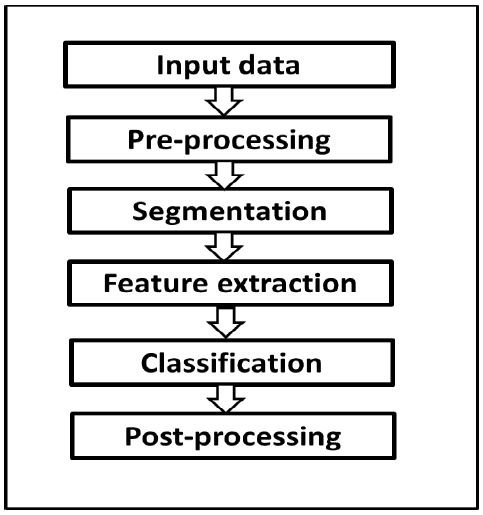

A text recognition procedure is carried out in several steps: preprocessing, segmentation, feature extraction, classification, and postprocessing.

Preprocessing

This initial phase enhances the quality of the input image through operations such as noise elimination and normalization, which in turn improves the recognition rate.
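As a rough illustration of noise elimination and normalization, the sketch below applies a 3×3 median filter and a linear rescaling to a tiny grayscale image stored as nested lists. The choice of a median filter and the function names `median_filter` and `normalize` are assumptions made for this example; the article does not prescribe a specific method, and a real system would likely use an image processing library.

```python
from statistics import median

def normalize(image):
    """Scale pixel values linearly to the range [0.0, 1.0]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1  # avoid division by zero on a uniform image
    return [[(p - lo) / span for p in row] for row in image]

def median_filter(image):
    """Replace each pixel with the median of its 3x3 neighbourhood.

    Near the borders the neighbourhood is clipped to the image.
    """
    h, w = len(image), len(image[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [image[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(median(window))
        out.append(row)
    return out

# Hypothetical 3x4 image with one isolated bright pixel ("salt" noise).
noisy = [
    [10, 10, 10, 10],
    [10, 255, 10, 10],
    [10, 10, 10, 10],
]
clean = median_filter(noisy)   # the isolated bright pixel is removed
scaled = normalize(noisy)      # darkest pixel -> 0.0, brightest -> 1.0
```

The median filter is used here because it removes isolated outliers without blurring edges as much as an averaging filter would.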

Segmentation

Segmentation is a crucial phase in recognition. It splits the input image into individual characters, so that each one can be recognized separately.
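One simple way to separate characters is a vertical projection profile: count the ink in every column and cut wherever a column is empty. The sketch below assumes a single line of binarized text (1 = ink, 0 = background) with at least one empty column between characters. The function name `segment_columns` is hypothetical, and the article does not say which segmentation method it has in mind.

```python
def segment_columns(binary):
    """Return (start, end) column ranges of characters, end exclusive.

    Assumes one text line where 1 = ink and 0 = background, and that
    neighbouring characters are separated by at least one empty column.
    """
    width = len(binary[0])
    # Vertical projection: amount of ink in each column.
    profile = [sum(row[x] for row in binary) for x in range(width)]
    segments, start = [], None
    for x, ink in enumerate(profile):
        if ink and start is None:
            start = x                      # a character begins
        elif not ink and start is not None:
            segments.append((start, x))    # a character ends
            start = None
    if start is not None:                  # character touching the right edge
        segments.append((start, width))
    return segments

# Hypothetical binarized text line containing three "characters".
line = [
    [1, 1, 0, 0, 1, 0, 1, 1],
    [1, 0, 0, 0, 1, 0, 1, 0],
    [1, 1, 0, 0, 1, 0, 1, 1],
]
boxes = segment_columns(line)
print(boxes)  # [(0, 2), (4, 5), (6, 8)]
```

This approach fails on touching or overlapping characters, which is one reason segmentation is considered a difficult phase.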

Feature Extraction

This phase extracts important information from the input image by applying feature extraction techniques such as histograms.

The aim of feature extraction is to represent the data effectively, which increases the recognition rate.
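As one possible histogram-style feature, the sketch below builds a projection histogram for a single segmented character: the ink count of every row and every column, scaled by the total amount of ink. The function name `projection_histogram` and the 3×3 glyph are assumptions for illustration only.

```python
def projection_histogram(glyph):
    """Concatenate per-row and per-column ink counts, scaled by total ink."""
    rows = [sum(r) for r in glyph]
    cols = [sum(r[x] for r in glyph) for x in range(len(glyph[0]))]
    total = sum(rows) or 1  # avoid division by zero on an empty glyph
    return [v / total for v in rows + cols]

# Hypothetical 3x3 binary glyph shaped like the letter "L".
glyph_L = [
    [1, 0, 0],
    [1, 0, 0],
    [1, 1, 1],
]
features = projection_histogram(glyph_L)
print(features)  # [0.2, 0.2, 0.6, 0.6, 0.2, 0.2]
```

Scaling by the total ink makes the feature vector less sensitive to stroke thickness, so thin and bold versions of the same character produce similar vectors.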

Classification

This is the decision-making phase: it compares the features extracted from the input with stored patterns and assigns each input to the correct character class. Trained classifiers such as an artificial neural network (ANN) or a support vector machine (SVM) are used.
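The idea of comparing an extracted feature vector with stored patterns can be sketched with a nearest-template classifier. This is a deliberately simple stand-in for the ANN or SVM classifiers mentioned above, not a replacement for them. The `templates` dictionary and its values are hypothetical.

```python
import math

def classify(features, templates):
    """Return the label whose stored feature vector is closest (Euclidean)."""
    return min(templates, key=lambda label: math.dist(features, templates[label]))

# Hypothetical stored patterns: one feature vector per character class.
templates = {
    "L": [0.2, 0.2, 0.6, 0.6, 0.2, 0.2],
    "I": [1 / 3, 1 / 3, 1 / 3, 0.0, 1.0, 0.0],
}

unknown = [0.25, 0.2, 0.55, 0.6, 0.2, 0.2]  # slightly distorted "L"
label = classify(unknown, templates)
print(label)  # L
```

A trained classifier learns decision boundaries from many examples per class, whereas this sketch relies on a single stored pattern per character.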

Post-Processing

This last phase improves the recognition rate by filtering and correcting the output of the classification phase. Typical postprocessing operations are formatting, spell-checking, and correction of the data.
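A minimal sketch of spell-checking as a postprocessing step is shown below. It uses `difflib.get_close_matches` from the Python standard library to replace each recognized word with the closest entry in a small lexicon. The lexicon `VOCABULARY`, the function name `correct`, and the similarity cutoff of 0.7 are assumptions chosen for this example.

```python
import difflib

# Hypothetical lexicon of words expected in the documents being read.
VOCABULARY = ["invoice", "receipt", "total", "amount", "date"]

def correct(word, vocabulary=VOCABULARY, cutoff=0.7):
    """Replace a word by its closest vocabulary entry, if one is similar enough."""
    matches = difflib.get_close_matches(word.lower(), vocabulary, n=1, cutoff=cutoff)
    return matches[0] if matches else word

# Typical recognition errors: "1" read instead of "i", "0" instead of "o".
raw = "rece1pt t0tal 42"
cleaned = " ".join(correct(w) for w in raw.split())
print(cleaned)  # receipt total 42
```

Words with no sufficiently similar lexicon entry, such as the number in this example, are left unchanged.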

Figure: General diagram of text recognition steps

Approaches for Machine learning text recognition

The machine learning approach aims to handle the complexity of the input by representing it through high-level features. The technology rests on the idea that nothing fundamentally prevents systems from improving their performance; machine handwriting recognition, for example, can reach human-level quality. Many types of architectures and algorithms can be used to implement deep learning.

Convolutional Neural Network (CNN)

A CNN is a type of machine learning algorithm designed specifically to work with image data. The image is processed through a series of convolutional layers, pooling layers, and fully connected layers, and the output is a class that represents the image. A CNN learns multiple layers of feature representations of an image: early layers examine low-level features such as edges and curves, and a sequence of convolutional layers builds these up into more abstract features. CNNs achieve good accuracy and performance because of characteristics such as local connectivity and parameter sharing. A CNN consists of an input layer, multiple hidden layers (convolutional, normalization, pooling), a fully connected layer, and an output layer. Neurons in one layer connect to only some of the neurons in the next layer, which makes it easier to scale to higher resolutions.

The input layer receives the input image. This layer holds information about the image’s height, width, and number of channels (RGB information). To recognize features, the network applies a sequence of convolution and pooling operations in multiple hidden layers. Convolution is one of the most important components of a CNN. Mathematically, convolution combines two functions to produce a new function. In a CNN, convolution is applied to the input image via a filter (also called a kernel) to produce a feature map. If the input layer contains n × n neurons and is convolved with a filter of size m × m, using a stride of 1 and no padding, the output has size (n - m + 1) × (n - m + 1). We perform several convolutions on the input, each with a different filter, so different feature maps emerge. Finally, we combine all of these feature maps to form the final output of the convolution layer. A pooling layer is placed between two convolutional layers to reduce the size of the feature space and hence the amount of computation. Pooling passes only the most relevant values to the next layer and leaves the rest behind.
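The output-size rule can be checked with a small sketch. The function below slides an m × m filter over an n × n image with stride 1 and no padding, which yields an (n - m + 1) × (n - m + 1) feature map. As in most deep learning libraries, the filter is not flipped, so strictly speaking this is cross-correlation. The function name `conv2d_valid` and the example filter are assumptions for illustration.

```python
def conv2d_valid(image, kernel):
    """Slide an m x m kernel over an n x n image (stride 1, no padding)."""
    n, m = len(image), len(kernel)
    size = n - m + 1  # output height and width
    return [[sum(image[y + j][x + i] * kernel[j][i]
                 for j in range(m) for i in range(m))
             for x in range(size)]
            for y in range(size)]

# Hypothetical 4x4 image: dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2x2 filter that responds where brightness rises left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, vertical_edge)
print(feature_map)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

With n = 4 and m = 2 the feature map is 3 × 3, and the only non-zero responses lie on the column where the edge sits.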

This reduces training time, helps prevent overfitting, and aids feature selection. The max-pooling operation takes the highest value from each sub-region of the feature map, which keeps the most prominent information; it is generally preferred in modern applications. Like a regular neural network, a CNN includes activation functions to introduce non-linearity into the system. Among the activation functions used extensively in deep learning models, the sigmoid function, the rectified linear unit (ReLU), and softmax are some well-known examples. In a CNN architecture, the classification layer is the final layer. It is a fully connected feed-forward network that acts as a classifier. This layer determines the predicted class of the input image by combining the features from all the previous layers.
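The pooling and activation operations described above can be sketched in a few lines of plain Python. The 2 × 2 non-overlapping pooling window, the function names, and the example values are assumptions for illustration; frameworks provide optimized versions of all of these.

```python
import math

def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    h, w = len(feature_map), len(feature_map[0])
    return [[max(feature_map[y + j][x + i]
                 for j in range(size) for i in range(size))
             for x in range(0, w - size + 1, size)]
            for y in range(0, h - size + 1, size)]

def relu(x):
    """Rectified linear unit: negative values become zero."""
    return max(0.0, x)

def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    shift = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical 4x4 feature map, reduced to 2x2 by pooling.
fmap = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 3],
]
pooled = max_pool(fmap)
print(pooled)  # [[4, 2], [2, 7]]

# Hypothetical raw scores for three character classes.
probs = softmax([2.0, 1.0, 0.1])
```

Softmax is typically applied in the final classification layer, where the class with the highest probability is taken as the prediction.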

Image recognition, image classification, object detection, and face recognition are just a few of the applications of CNNs. The two most important parts of a CNN are the feature extraction section and the classification section.

Recurrent Neural Network (RNN)

An RNN is a deep learning technique that is both effective and robust, and it is one of the most promising methods currently in use because it has an internal memory. RNNs are useful when the next word of a sequence has to be predicted, and they are commonly used with sequential data such as financial data or DNA sequences. The reason is that the recurrent layers give the model a short-term memory: the output from the previous step is fed into the current step. Using this memory, the network retains information about what has been computed so far and can predict the next item more accurately. To find patterns in such sequences, we need a network that has knowledge of the earlier data. The architecture of an RNN includes three layers: an input layer, a hidden layer, and an output layer. The hidden layer remembers information about the sequence.

Compared with an RNN, a traditional feed-forward neural network (FNN) cannot remember the order of the data. Suppose we give the word “hello” as input to an FNN, which processes it character by character. By the time it gets to the character ‘o’, it has already forgotten about ‘h’, ‘e’, and ‘l’. A recurrent neural network, because of its internal memory, can remember those characters. This is important because the sequence contains information about what will come next, which is why an RNN can perform tasks that some other techniques cannot.
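The “hello” example can be made concrete with a minimal recurrent step, sketched below under simplifying assumptions: two hidden units, one-hot encoded characters, no bias terms, and hand-picked weights `W_xh` and `W_hh` that are purely hypothetical (a real network would learn them). The point is only that the hidden state after reading “hello” differs from the state after reading “o” alone, because earlier characters are carried forward.

```python
import math

ALPHABET = "helo"  # the distinct characters of the example word

def one_hot(ch):
    """Encode a character as a vector with a single 1."""
    return [1.0 if ch == c else 0.0 for c in ALPHABET]

def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def rnn_step(x, h_prev, W_xh, W_hh):
    """h_t = tanh(W_xh x_t + W_hh h_{t-1}): mix the new input with the memory."""
    a = matvec(W_xh, x)
    b = matvec(W_hh, h_prev)
    return [math.tanh(ai + bi) for ai, bi in zip(a, b)]

# Hypothetical fixed weights for 2 hidden units (not trained).
W_xh = [[0.5, -0.3, 0.8, 0.1],
        [-0.2, 0.7, 0.4, -0.6]]
W_hh = [[0.9, 0.1],
        [-0.4, 0.6]]

def encode(text):
    """Feed a string through the recurrent step and return the final state."""
    h = [0.0, 0.0]  # empty memory before the first character
    for ch in text:
        h = rnn_step(one_hot(ch), h, W_xh, W_hh)
    return h

state_hello = encode("hello")  # final state depends on h, e, l, l and o
state_o = encode("o")          # final state depends on o only
```

A feed-forward network that sees only the current character would produce the same output for ‘o’ in both cases.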

Figure: Recurrent Neural Network

Application areas of RNNs include sequence classification, such as sentiment classification and video classification; sequence labeling, such as image captioning and named entity recognition; and sequence generation, such as machine translation. RNNs are also useful in time series prediction, and they are flexible enough to handle various types of data.

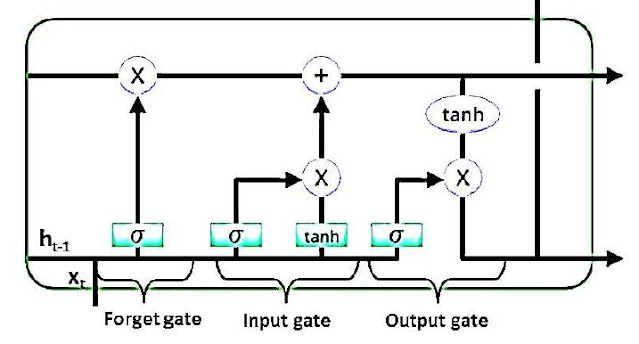

Long Short Term Memory (LSTM)

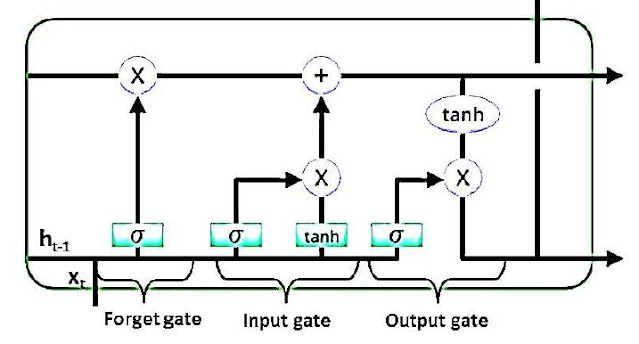

LSTM is one of the more difficult deep learning techniques to master. Unlike traditional feed-forward neural networks, an LSTM has feedback connections. It can process entire data sequences such as speech or video, as well as single data points such as images. LSTM networks are a type of RNN that can learn long chains of dependencies, and they overcome two problems of the basic RNN model: it suffers from short-term memory, and it has no control over which part of the information is carried forward and how much is forgotten. An LSTM uses memory blocks called cells, which carry information throughout the processing of the sequence and can maintain it for long periods. Two states are passed on to the next cell: the cell state and the hidden state. A typical LSTM unit consists of a cell, an input gate, an output gate, and a forget gate. These three gates regulate the information in the cell, and the cell remembers values over arbitrary time intervals. The model contains these interacting layers in a repeating module.

The forget gate layer decides what to keep and what to discard from the old information. Information that is no longer needed, or is of low significance, is removed by multiplying the cell state by a filter. This is necessary to optimize the effectiveness of the LSTM network.

The input gate layer determines what data should be stored in the cell state. A sigmoid function controls which values are added to the cell state, filtering the data in the same way as the forget gate. As a result, the cell state is updated only with information that is important.

Figure: LSTM architecture

The output gate selects and outputs the useful information from the current cell state. First, a vector is generated by applying the tanh function to the cell state. This vector is then filtered using the sigmoid function.
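The interaction of the three gates can be sketched with a single-unit LSTM cell that uses scalar inputs and states. The parameter values in `params` are hypothetical and chosen by hand so that the behaviour is easy to follow; a real LSTM uses vectors and weight matrices and learns them during training.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell; p maps gate name -> (w_x, w_h, bias)."""
    def gate(name, activation):
        w_x, w_h, b = p[name]
        return activation(w_x * x + w_h * h_prev + b)

    f = gate("forget", sigmoid)       # 0..1: how much of the old cell state to keep
    i = gate("input", sigmoid)        # 0..1: how much of the candidate to store
    g = gate("candidate", math.tanh)  # candidate value for the cell state
    o = gate("output", sigmoid)       # 0..1: how much of the cell to expose

    c = f * c_prev + i * g            # discard old information, add new information
    h = o * math.tanh(c)              # tanh of the cell state, filtered by the output gate
    return h, c

# Hypothetical hand-picked parameters (not trained).
params = {
    "forget":    (0.0, 0.0, 6.0),    # large bias keeps the gate near 1: retain memory
    "input":     (4.0, 0.0, -2.0),   # opens only for clearly positive inputs
    "candidate": (1.0, 0.0, 0.0),
    "output":    (0.0, 0.0, 2.0),
}

h, c = 0.0, 0.0
history = []
for x in [1.0, 0.0, 0.0, 0.0]:        # one signal followed by silence
    h, c = lstm_step(x, h, c, params)
    history.append(c)
```

With these values the cell state stays almost constant after the first input, which illustrates how the forget gate lets an LSTM hold information across several steps.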

LSTMs hold great promise for solving problems that involve sequences and time series.

Due to the variety of writing styles used in multiple languages, text recognition is a difficult task. In this post, we have looked at how text recognition works and how different steps like segmentation, feature extraction, and classification are used. Deep learning approaches have also been explained, which are beneficial in text recognition. The analysis in this post revealed that there is still scope for improvement in both the algorithms and the word recognition rate.
