A deep learning (DL) algorithm is a neural network with more than three layers, counting the input and output layers. Deep learning has recently been applied in several research areas, where it has produced state-of-the-art results. DL algorithms perform well on large amounts of data, whether labeled or unlabeled. A large amount of such data is now available as audio, CSV files, images, and text, and it can be used to train DL algorithms and improve model performance.

Figure: Deep learning algorithm

With conventional methods, feature extraction and prediction from data are challenging tasks. Deep learning has changed this, because DL algorithms learn from the data automatically, finding patterns through many hidden layers. Adding hidden layers lets the network represent the data at a higher level of abstraction. Problems that once required a lot of time for feature extraction, preprocessing, and prediction can now be handled by DL algorithms, given enough computation power. The computational resources available today are one of the biggest enablers of deep learning: with them, training can take less time than with the traditional ML approach. Because a large amount of data is available, the depth of neural network models has also increased significantly, allowing the model to abstract more from its input, such as patterns, edges, and features.

Another advantage of DL algorithms is that they can learn from unlabeled data, provided the dataset is large. If the dataset is small, data augmentation can be used to increase its size. Having seen DL algorithms improve the performance of research projects, many fields have started adopting deep learning, including medical imaging, voice assistants, self-driving cars, image recognition, fraud detection, advertising, and finance. For example, in medical imaging a model can predict whether a patient’s cardiovascular condition is normal or abnormal.
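To make the data augmentation idea concrete, here is a minimal sketch in plain Python. It assumes images are stored as nested lists of pixel values; the function names `horizontal_flip` and `augment` are made up for this illustration, and a mirror flip is only one of many possible augmentations.

```python
def horizontal_flip(image):
    """Mirror a 2D image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def augment(dataset):
    """Return the original images plus one flipped copy of each."""
    return dataset + [horizontal_flip(image) for image in dataset]


# One tiny 2x3 "image"; after augmentation the dataset holds two images.
images = [[[0, 1, 2],
           [3, 4, 5]]]
augmented = augment(images)
print(len(augmented))
```

A flip only helps when it does not change the label (a mirrored cat is still a cat, a mirrored digit may not be the same digit), so the choice of augmentation depends on the task.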

Similarly, in healthcare, many critical diseases can be predicted or detected early from medical images such as X-rays, CT scans, and MRI scans. Recently, DL algorithms have been used to detect COVID-19 from patients’ CT scans. Another example is 5G and mobile networks: the number of mobile devices is growing rapidly, and processing data between device and network while keeping battery use low is a difficult task. DL is being used to address these issues and improve the performance of mobile devices.

DL algorithms are typically trained on very large datasets and do not perform well on small ones, yet obtaining data is costly in some research areas. Barz et al. proposed an approach for training DL algorithms on small datasets: changing the loss function from categorical cross-entropy to cosine loss improves model performance on small datasets.
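The difference between the two loss functions can be sketched in a few lines of plain Python. This is an illustration under simple assumptions (one-hot targets, a single sample, a non-zero output vector), not the authors’ implementation; the function names are chosen for this example.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities (shifted by the max for stability)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(logits, target_index):
    """Negative log-probability assigned to the correct class."""
    return -math.log(softmax(logits)[target_index])


def cosine_loss(outputs, target_index):
    """1 - cosine similarity between the output vector and a one-hot target.

    With a one-hot target, the cosine reduces to the target component
    divided by the length of the output vector (assumed non-zero).
    """
    norm = math.sqrt(sum(v * v for v in outputs))
    return 1.0 - outputs[target_index] / norm


outputs = [2.0, 0.5, -1.0]
print(categorical_cross_entropy(outputs, 0))
print(cosine_loss(outputs, 0))
```

Cosine loss depends only on the direction of the output vector, not its magnitude, so it is bounded between 0 and 2, while cross-entropy can grow without limit.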

Overview of Deep learning algorithm architectures

Supervised Architectures

Supervised learning is a method in which the data given to the model is clearly labeled, so the model’s output can be checked against the correct answer. The dataset is split by a chosen ratio into three parts: the training set is used to fit the model, the validation set is used to check the model’s performance and tune it, and the remaining data (the test set) is used for the final evaluation of the model’s predictions. Metrics used to evaluate supervised algorithms include the confusion matrix and root mean squared error (RMSE), among others. There are two types of supervised algorithms, classification and regression; both make predictions from labeled data.

In classification, the predicted outputs are discrete values. A problem is binary when there are two classes, such as 0 or 1, play or not play, cat or not a cat, and dog or not a dog, and multi-class when there are more than two. One example of multi-class classification is the MNIST dataset: the images are handwritten digits, and the objective is to predict the digit from 0 to 9, which makes it a 10-class problem. An example of binary classification is spam filtering, which classifies each email as spam or not spam. Accuracy is the most commonly used metric for classification problems.
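The split and the classification metrics described above can be sketched as follows. The 70/15/15 ratio, the seed, and the function names are assumptions made for this example, not values taken from the post.

```python
import random


def split_dataset(samples, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle the samples and cut them into train, validation and test sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains
    return train, val, test


def confusion_matrix(y_true, y_pred, num_classes):
    """matrix[t][p] counts samples of true class t predicted as class p."""
    matrix = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


y_true = [0, 1, 1, 0]
y_pred = [0, 1, 0, 0]
print(confusion_matrix(y_true, y_pred, 2))
print(accuracy(y_true, y_pred))
```

The diagonal of the confusion matrix holds the correct predictions, so accuracy is the sum of the diagonal divided by the total number of samples.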

In regression, the predicted outputs are continuous values, such as a numeric score. The key difference between the two is that classification predicts a class from a fixed set, while regression predicts a quantity. One example is weather prediction, where the model is trained on past weather data and predicts the weather for future days. For regression models, the most common metric is RMSE.
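RMSE is the square root of the average squared difference between the true and predicted values. A minimal sketch, with made-up temperature values as input:

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length lists of numbers."""
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical daily temperatures: actual versus predicted.
actual = [21.0, 23.5, 19.0]
predicted = [20.0, 24.0, 19.5]
print(rmse(actual, predicted))
```

Because the errors are squared before averaging, a few large mistakes raise RMSE more than many small ones.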

Convolutional Neural Networks

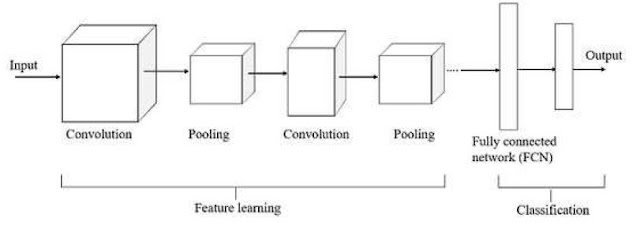

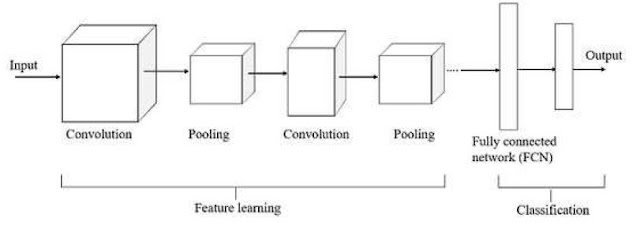

A CNN is a multilayered neural network used mainly for images. Plain fully connected networks are not well suited to extracting features from raw images, so convolution and pooling layers are used for feature extraction. Those layers do not classify on their own, so fully connected layers are added at the end to classify the data.

Figure: Convolutional Neural Network

Fig. 1 shows multiple layers of convolution and pooling, which are used to extract features from images; stacking more of these layers generally lets the network learn more abstract features. Pooling reduces the dimensionality of the feature maps. Max pooling is commonly used because it keeps the strongest response in each window. After that come flatten, fully connected (FCN), and softmax layers for classification; for a regression model, dense layers with a continuous output can be used instead. Feature extraction from images is the most difficult challenge for traditional ML algorithms, whereas a CNN extracts features automatically and learns from them.
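The two feature-extraction operations can be written out in plain Python to show what they compute. This is a sketch on nested lists with no padding and a stride of 1 for the convolution; real CNN libraries use optimized tensor code, and the kernel values here are fixed by hand rather than learned.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products.

    As in most deep learning libraries, the kernel is not flipped
    (strictly speaking this is cross-correlation).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, size):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, size):
            row.append(max(feature_map[i + a][j + b]
                           for a in range(size) for b in range(size)))
        pooled.append(row)
    return pooled


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, 1]]
print(conv2d(image, kernel))  # [[6, 8], [12, 14]]
```

Pooling a 4x4 feature map with a 2x2 window gives a 2x2 map, which is the dimensionality reduction the text refers to.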

Recurrent Neural Networks

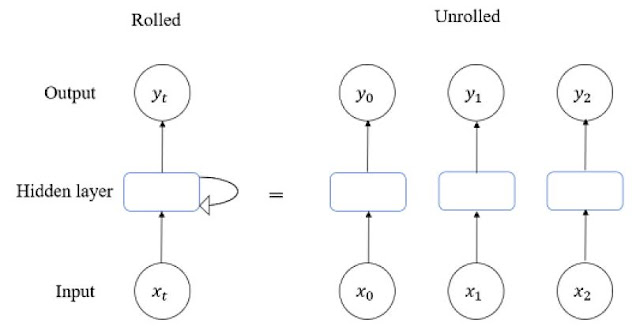

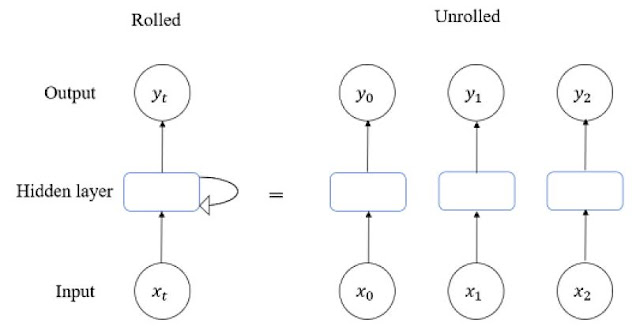

Assume the objective is to predict the next letter or word in a sentence. In a CNN there are typically many hidden layers, and each layer is independent, so the weights and activations differ from layer to layer. That independence makes it difficult to use a CNN to predict the next word in a sequence. In a recurrent network, the hidden layers are combined into one because they share the same weights and biases. Take the sentence “nature is beautiful”: after receiving the earlier words as input, the RNN must predict the last word, “beautiful”. To do so, it has to keep a memory of the previous inputs. This is why, in an RNN, all the hidden layers are rolled up into a single recurrent layer.

Figure: Recurrent Neural Network
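The idea of one set of weights reused at every time step can be sketched as a plain Python forward pass. The weight names (`w_xh`, `w_hh`, `b_h`) and the tanh activation are common conventions assumed for this example; training and the output layer are left out.

```python
import math


def rnn_forward(inputs, w_xh, w_hh, b_h):
    """Run a simple recurrent layer over a sequence of input vectors.

    The same weights are applied at every step; the hidden state h
    carries information from earlier inputs forward in time.
    """
    hidden_size = len(b_h)
    h = [0.0] * hidden_size
    states = []
    for x in inputs:
        h = [
            math.tanh(
                sum(w_xh[k][i] * x[i] for i in range(len(x)))
                + sum(w_hh[k][j] * h[j] for j in range(hidden_size))
                + b_h[k]
            )
            for k in range(hidden_size)
        ]
        states.append(h)
    return states


# One input feature, one hidden unit, two time steps.
states = rnn_forward([[1.0], [0.0]], w_xh=[[1.0]], w_hh=[[1.0]], b_h=[0.0])
print(states)
```

The second input is zero, yet the second hidden state is not: it still reflects the first input, which is the memory the text describes.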

Long Short-Term Memory

As shown in Fig. 3, an RNN can predict the next word of a sentence or the next letter in a word, but only when the input to the network is short. If a large corpus is given as input, it cannot reliably predict the next word. This is because an RNN retains input only for the short term: if ten lines of text are given as input, it keeps overwriting what it has stored, because it does not know which words are important and which are not. LSTM overcomes this problem. It has a memory cell that can hold important information for a long time, controlled by three gates that make prediction possible even with long inputs. The input gate decides which new information is written to the cell, the forget gate decides which stored information is kept and which is discarded, and the output gate decides when and how much of the cell’s content is sent out. As shown in Fig. 3, the cell learns weights that control these gates.

Figure: deep learning application
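A single LSTM step can be sketched with scalar values to show how the three gates interact. This follows the commonly used LSTM formulation; the parameter layout (a dict of `(w, u, b)` tuples per gate) is an assumption made for readability, and real implementations use vectors and matrices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step on scalar input, hidden state and cell state.

    params maps each of "f", "i", "o", "g" to a tuple (w, u, b):
    input weight, recurrent weight and bias.
    """
    def pre(gate):
        w, u, b = params[gate]
        return w * x + u * h_prev + b

    f = sigmoid(pre("f"))    # forget gate: how much of the old cell to keep
    i = sigmoid(pre("i"))    # input gate: how much new information to write
    o = sigmoid(pre("o"))    # output gate: how much of the cell to expose
    g = math.tanh(pre("g"))  # candidate value to write
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


# Forget gate almost fully open, input gate almost closed:
# the cell value survives many steps nearly unchanged.
keep = {"f": (0.0, 0.0, 10.0), "i": (0.0, 0.0, -10.0),
        "o": (0.0, 0.0, 0.0), "g": (0.0, 0.0, 0.0)}
h, c = 0.0, 1.0
for _ in range(50):
    h, c = lstm_step(0.0, h, c, keep)
print(c)
```

With these hand-picked biases the cell still holds almost all of its initial value after 50 steps, which is the long-term memory a plain RNN lacks.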

Gated Recurrent Unit

A GRU is similar to an LSTM but is simpler and easier to train. It has an update gate that controls how much of the previous state is carried forward and a reset gate that determines how much of the previous state to forget.
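A scalar sketch of one GRU step shows the two gates at work. The parameter layout is an assumption for this example, and the sign convention for the update gate varies between references (some swap `z` and `1 - z`).

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gru_step(x, h_prev, params):
    """One GRU time step on a scalar input and hidden state.

    params maps "z" (update gate), "r" (reset gate) and "h" (candidate)
    to a tuple (w, u, b): input weight, recurrent weight and bias.
    """
    wz, uz, bz = params["z"]
    wr, ur, br = params["r"]
    wh, uh, bh = params["h"]
    z = sigmoid(wz * x + uz * h_prev + bz)  # how much to update
    r = sigmoid(wr * x + ur * h_prev + br)  # how much past state to use
    h_candidate = math.tanh(wh * x + uh * (r * h_prev) + bh)
    return (1.0 - z) * h_prev + z * h_candidate


# Update gate nearly closed: the previous state is carried forward.
hold = {"z": (0.0, 0.0, -10.0), "r": (0.0, 0.0, 0.0), "h": (1.0, 1.0, 0.0)}
print(gru_step(1.0, 0.8, hold))
```

Compared with the LSTM, there is no separate cell state and one gate fewer, which is where the simplicity comes from.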

Unsupervised Architectures

In unsupervised learning, the data provided to the model is unlabeled. One goal is to find patterns or structure in the dataset and divide the data into similar groups to gain insight, which is known as clustering. Understanding how the data is distributed in space is known as density estimation. Autoencoders are used to filter out noise in the data, typically for dimensionality reduction on unlabeled data. These methods are usually applied when the dataset is very large and labeling it would be too expensive, which is where deep learning comes in handy.

Autoencoders

Figure: Autoencoders

Autoencoders are an unsupervised learning method used for data encoding and decoding; an autoencoder is a type of artificial neural network (ANN). Autoencoders are known for the bottleneck layer in the network, which is built with an encoding function. The network has three layers. The first is the input layer, which takes the input and passes it on. The second is the bottleneck or hidden layer; as shown in Fig. 4, it is significantly smaller than the input layer, because the encoder function produces a compressed representation of the data, which forces the network to drop noise and redundant information. The third is the output layer, which uses the decoder function to reconstruct the input. Finally, an error function computes the difference between the input and the reconstructed output.
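The encode, decode, and error steps can be sketched in plain Python. The weights below are fixed by hand to keep the example short (a trained autoencoder would learn them), and mean squared error is assumed as the error function.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def autoencoder_forward(x, encoder_weights, decoder_weights):
    """Compress x to a smaller code, then reconstruct it from the code."""
    code = [max(0.0, z) for z in matvec(encoder_weights, x)]  # ReLU bottleneck
    reconstruction = matvec(decoder_weights, code)
    return code, reconstruction


def reconstruction_error(x, reconstruction):
    """Mean squared difference between input and reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, reconstruction)) / len(x)


# 4 inputs -> 2-unit bottleneck -> 4 outputs (hand-picked weights).
encoder = [[0.5, 0.5, 0.0, 0.0],
           [0.0, 0.0, 0.5, 0.5]]
decoder = [[1.0, 0.0],
           [1.0, 0.0],
           [0.0, 1.0],
           [0.0, 1.0]]

redundant = [1.0, 1.0, 2.0, 2.0]
code, recon = autoencoder_forward(redundant, encoder, decoder)
print(code, reconstruction_error(redundant, recon))
```

Here the input repeats each value twice, so the two-unit code loses nothing and the error is zero; an input such as `[1.0, 3.0, 2.0, 2.0]` cannot be squeezed through the bottleneck without loss, and the error becomes positive.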

Restricted Boltzmann Machines

Figure: Restricted Boltzmann Machine

An RBM is a two-layered neural network and a restricted variant of the Boltzmann machine (BM). One layer is the visible or input layer, and the other is the hidden layer. Every neuron in the visible layer is connected to every neuron in the hidden layer, forming a bipartite graph with no connections inside a layer. A traditional BM, by contrast, is fully connected: nodes in the visible layer are connected to each other as well as to every node in the hidden layer. During training, the probability distribution is estimated using a stochastic approach. Each layer has a bias: the hidden bias is used to produce the activations in the forward pass, and the visible bias is used to reconstruct the input layer. RBMs are mostly used for dimensionality reduction.
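The forward pass and reconstruction can be sketched for a binary RBM as below. This shows only one sampling step with given weights, assuming sigmoid units; the weight update used in training (for example contrastive divergence) is left out, and all names are chosen for this illustration.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(v, weights, hidden_bias):
    """P(h_j = 1 | v); weights[j][i] links visible unit i to hidden unit j."""
    return [
        sigmoid(hidden_bias[j] + sum(weights[j][i] * v[i] for i in range(len(v))))
        for j in range(len(hidden_bias))
    ]


def visible_probs(h, weights, visible_bias):
    """P(v_i = 1 | h), using the same weights in the reverse direction."""
    return [
        sigmoid(visible_bias[i] + sum(weights[j][i] * h[j] for j in range(len(h))))
        for i in range(len(visible_bias))
    ]


def sample(probs, rng):
    """Draw a binary state for each unit from its probability."""
    return [1 if rng.random() < p else 0 for p in probs]


def gibbs_step(v, weights, visible_bias, hidden_bias, rng):
    """Forward pass to sampled hidden states, then reconstruct the input."""
    h = sample(hidden_probs(v, weights, hidden_bias), rng)
    return h, visible_probs(h, weights, visible_bias)


rng = random.Random(0)
weights = [[0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0]]  # 2 hidden units, 3 visible units
h, v_recon = gibbs_step([1, 0, 1], weights, [0.0] * 3, [0.0] * 2, rng)
print(h, v_recon)
```

There are no visible-to-visible or hidden-to-hidden terms in either function, which is exactly the restriction that separates an RBM from a general Boltzmann machine.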

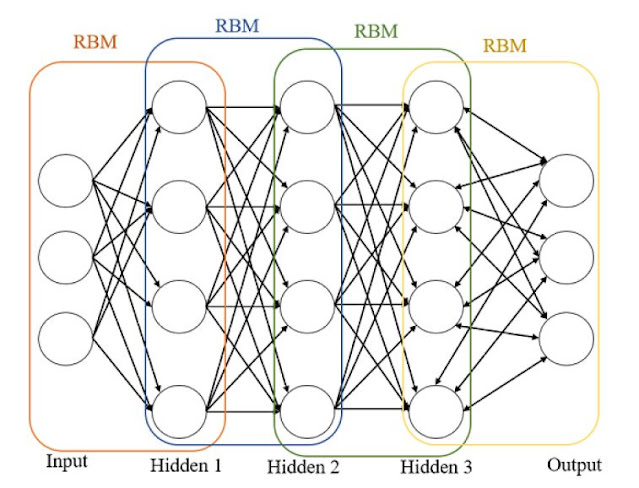

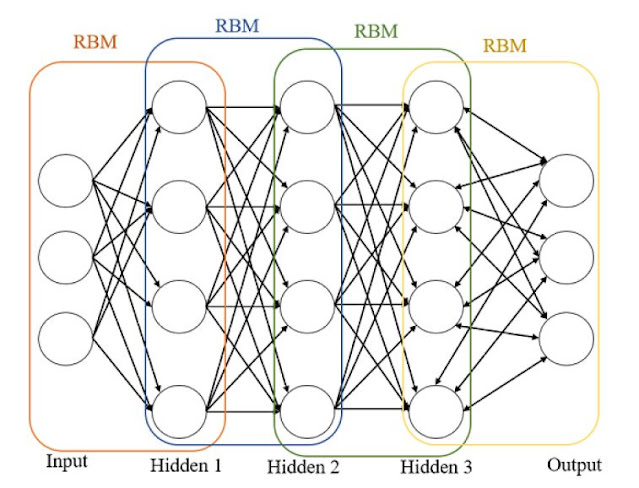

Deep Belief Networks

Figure: Deep Belief Network

A DBN is a deep multilayer network architecture with many hidden layers, in which each pair of connected layers is an RBM. Training is done in an unsupervised manner, and fine-tuning is supervised. In the training phase, the input layer takes the data and passes it to the first hidden layer; this pair of layers is an RBM. The first hidden layer then acts as the input to the second hidden layer, and the procedure continues layer by layer until the whole stack is trained. The output layer performs the classification, assigning the labels. After fine-tuning the network with backpropagation or gradient descent, the training process is complete.

Applications of Deep Learning

The following are some applications of deep learning that have been growing quickly in recent years.

Natural Language Processing

NLP is the computerized analysis of text. Its main goal is to understand and process human language. Even though research is very active, that goal has not yet been fully achieved. In classical NLP, most of the work is done manually: preprocessing such as tokenization, lemmatization, and stop-word removal comes first, then feature extraction, and then models or algorithms are applied for inference and output. Because these steps are manual, mistakes can creep in and good features can be missed. With DL-based NLP, the most challenging tasks, such as feature selection and preprocessing, are handled automatically because the model learns features on its own. DL algorithms pick out the best features to maximize performance, and their response time is much shorter than that of classical NLP algorithms. The advantages of DL over classical NLP are therefore higher accuracy, the ability to handle challenging tasks on its own, and fast responses.
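The manual steps of the classical pipeline can be sketched with the standard library alone. The stop-word list is a tiny hypothetical one, lemmatization is omitted because it needs a dictionary or a dedicated library, and n-grams stand in for the feature-extraction step.

```python
import re

# Hypothetical, deliberately tiny stop-word list for this example.
STOP_WORDS = {"the", "is", "a", "an", "of", "and", "to", "in"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def remove_stop_words(tokens, stop_words=STOP_WORDS):
    """Drop very common words that carry little meaning on their own."""
    return [token for token in tokens if token not in stop_words]


def ngrams(tokens, n=2):
    """Return all runs of n consecutive tokens as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


tokens = tokenize("Nature is beautiful in the spring!")
content = remove_stop_words(tokens)
print(content)
print(ngrams(content, 2))
```

Every choice here (the regular expression, the stop-word list, the value of `n`) is made by hand, which is the kind of manual decision a DL model avoids by learning its features from the data.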

Araque et al. proposed a novel approach that integrates traditional models with a DL technique and achieves a better F1 score than the DL technique alone. They first built a DL algorithm with word embeddings, then built two ensemble techniques of the kind commonly used in NLP, and finally combined the models, taking the best features of each. Majumder et al. developed a CNN model that takes text essays as input and extracts personality traits from the documents. They proposed five networks, all of which are binary classifiers. The input text corpus is converted to n-gram vectors, which are fed to an FCN that classifies whether each trait is positive or negative for the document.

Healthcare

Deep learning is reshaping the healthcare industry by opening up new possibilities to improve people’s lives. DL is used in medical imaging, computer-aided disease detection, genome analysis, drug discovery, and more. Many conditions have been identified using DL, such as brain tumors, blockages in the heart, blocked vessels in the retina, and abnormalities in X-rays, CT scans, and MRI images. DL is also applied in IoT devices to monitor ECG and heart rate and to support medical diagnosis. Khened M et al. proposed a CNN model with an ensemble of classifiers to detect cardiac diseases from MRI images, using volume estimation of the left ventricle. The images are in DICOM format and show a short-axis view of the heart, and the authors report 100% accuracy in disease classification. Jindal V et al. applied DL in wearable devices for monitoring real-time data. Running DL on low-power devices is challenging, and the design proposed in that paper addresses this limitation: the authors combined external network and inertial sensor data features and used spectral-domain preprocessing to optimize DL on the devices.

Image Recognition

CNNs are used extensively in image and video recognition. In recent years, image processing with DL has grown considerably because of its strong classification results. The most widely used DL algorithm for images is the CNN: it has multiple convolution and pooling layers, which reduce the size of the images and extract the best features, and the learned features are then fed to an FCN that classifies the images. Image recognition is applied in many areas, such as medicine, mineral detection, autonomous systems, and biometrics.

Srinivasa K et al. applied CNN and LSTM to detect facial expressions in videos and images. A massive amount of multimedia data is available today, and the paper aims to detect the facial expression in each image or frame, for which the movement of facial muscles and eye blinking are also important. Traditional ML methods such as KNN, SVM, and K-means have been applied to this task, but they cannot process large amounts of data, capture each frame of a video, and aggregate the output. The combined LSTM and CNN model captures each frame in a video, scores the expression on a range between 1 and 100, and finally aggregates the results across frames, so it can also handle large amounts of data.

Iliadis M et al. proposed a framework for recovering video frames using deep learning. With DL, the quality of the reconstructed frames improved significantly compared to traditional ML methods, and frames are restored in a matter of seconds. Adding more layers to the network improved performance further.

Autonomous or Self-Driving Cars

In the automobile industry, deep learning is being applied to self-driving cars, and research in this area has increased significantly. The key issues in building a self-driving car are the dataset and the network. A massive amount of data covering cars, pedestrians, footpaths, and so on is required to train the DL model, and all of it cannot be found in a single dataset. Even with such a massive dataset, cases like damaged roads and accidents remain very hard to handle. The second issue is the neural network itself: even with many deep layers, reaching high accuracy is challenging, and even if the model is 100% accurate in training, there is no guarantee that no errors will occur. Maqueda A et al. used event cameras, which capture dynamic vision without redundant information. Event cameras capture the moving edges in a scene with low latency and high dynamic range, an advantage over traditional cameras. A CNN is applied to these images to predict the steering angle of the vehicle. Similarly, Ramos et al. proposed an obstacle detection framework that uses DL to detect small road hazards. The proposed FCN predicts obstacles as small as 5 cm in height on the road, and the network’s performance improved significantly.

Cybersecurity

Since the number of threats on networks has risen dramatically, companies worldwide are looking for new solutions to reduce the threats to internet-connected devices. These threats can be detected using conventional methods such as host security and network security systems, with tools like firewalls, antivirus software, and intrusion detection systems. With DL, the time taken to analyze malware and identify its features is reduced, and the features of malware attacks are identified more robustly. DL has also improved the detection of new types of network attacks, because detection is automatic and saves a lot of time on feature engineering. Dali Zhu et al. point out that Android is open source, which allows attackers to create new malware that traditional ML methods cannot detect. In their paper, DeepFlow, a novel DL method, accesses the data directly from the applications to detect malware. The proposed method achieved a higher F1 score than the conventional methods.

Speech Recognition

Neural networks were first applied to speech recognition in the early ’90s. They were popular at the time but could not outperform Gaussian mixture models (GMMs). More recently, deep neural networks (DNNs) have become popular thanks to the availability of large training datasets, new DNN architectures, more computing power, and better activation functions. The deeper the network, the more powerful the model, but training was slow even with GPUs. Alternatively, RBMs can be used for training. Huang J et al. applied DBNs to audio-visual speech recognition (AVSR) in place of the traditional GMM and hidden Markov model (HMM) methods. The features extracted using the DBN gave better results than GMM/HMM, so they proposed a method that combines the DBN features for AVSR with GMM, which reduced the error rate by 21%. Noda K et al. proposed an approach that integrates features with an HMM. First, the audio features are extracted using autoencoders, which denoise them after pre-processing. Second, the visual features are extracted using a CNN, which gives robust features. Finally, the acquired features are integrated using the HMM.

Automatic Coloring

Coloring grayscale images is a challenging task. It can be done manually, but that is time-consuming and difficult. DL can colorize black-and-white images by using the context and the objects in the image. This can be done with a model pre-trained on ImageNet, a very large and high-quality dataset, with supervised layers added to recreate the image in color. Colorization with DL algorithms is visually impressive and very fast compared to conventional methods, and the same approach can be used to color the frames of black-and-white movies. Larsson G et al. developed a fully automatic DL network for coloring grayscale images. The proposed model predicts a per-pixel color histogram, from which a color image is produced automatically, making colorization less time-consuming and more cost-efficient.

This post has provided an overview of deep learning. Deep learning is growing fast in many areas, and researchers have adopted it widely because it delivers significant performance gains, driven by the large amounts of data available for training, deeper architectures, more computing power, and the many libraries available for DL techniques. One of DL’s major advantages is that it automatically learns robust features from the data, which are very hard to obtain with traditional methods. The post gave a brief explanation of widely used networks and algorithms and described some of the many applications that use deep learning. Deep learning has a lot of potential to grow in many areas because it needs less human intervention.
